Hello,

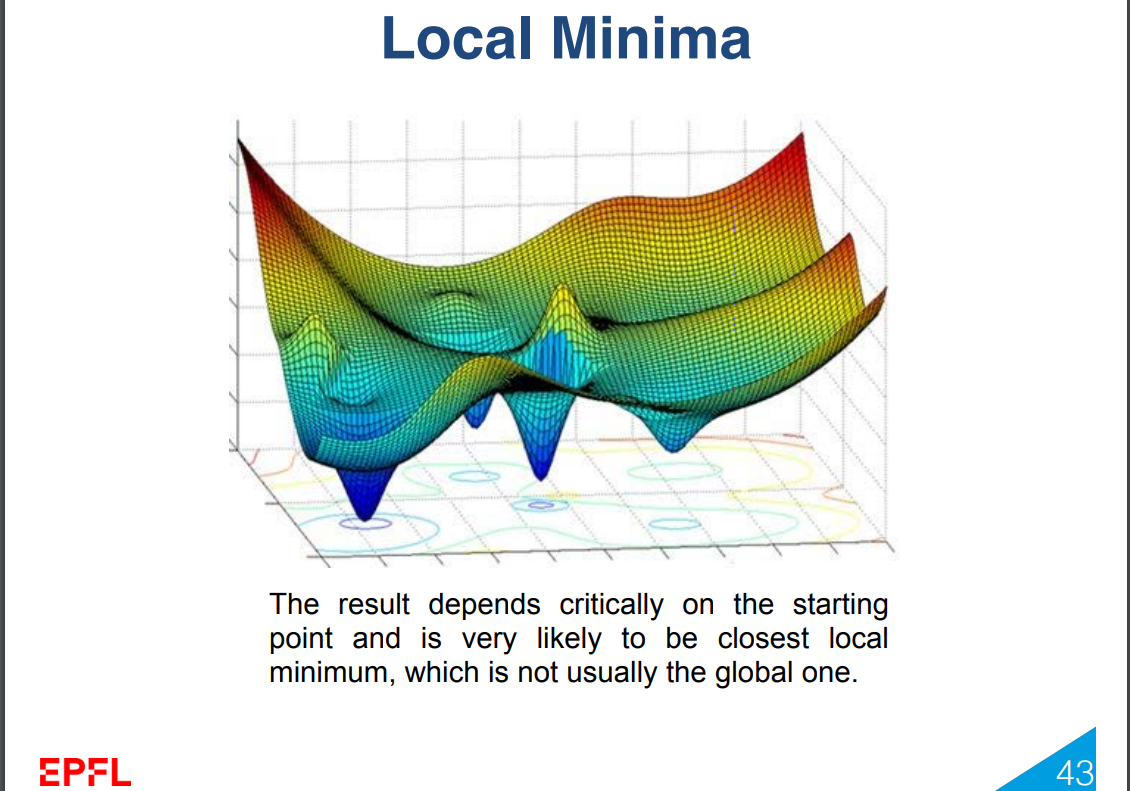

I'm going over the lectures and noticed something in week 5, about optimization. At slide page 43, we were given an image of a function, let's take that for example. I was wondering for such non-convex functions, could we do the following:

Step 1: Using Gradient Descent, find a local minima.

Step 2: "Slice" the function with a (hyper)plane at that point. For example, if the local minima gives us the value z = 6, slice the function at z = 6.

Step 3: Find the first intersection between this new (hyper)plane and the function that's different from the initial minima.

Step 4: Initiate a second Gradient Descent at this new point.

Step 5: Repeat until there are no intersections left except the latest minima, or if the function keeps bouncing between the same set of values(assuming it tests all other possible values before bouncing back to the initial value who sliced the function at that given point).

To human eye, it seems very intuitional: Just keep abandoning the "upper half" of the function and keep evaluating new gradient descents until you hit a single value which will be the global minimum or find multiple such values. However, to find the intersection we'd on first thought need to test all the values of the function one by one, which if we do anyways we could just keep the minimum in a variable that we update every time we find a smaller point, and achieve the same result but much faster.

Thus, I was wondering if there is a specific algorithm that uses a similar procedure to find global extrema on non-convex functions with a better time complexity than this simple process I mentioned.

Thank you for your help and best regards,

Derin Arda Alpay.