Instructor's notes - before lecture

What this course is about: networked computers as tools for social self-organization.

Computers are powerful tools enabling people to communicate, cooperate, deliberate, make decisions, and innovate in a multitude of ways. Some uses:

Communication: Alice sends Bob an E-mail or makes a conference call.

Association: a set of users form an online discussion group or forum.

Cooperation: users work together to write or design something.

Curation: users find, filter, vet, and organize relevant content.

Deliberation: users employ formal processes to debate issues or policy.

Decision: users employ social choice (e.g., voting) to decide collectively.

Organization: users form organizations/societies, possibly decentralized.

Governance: users employ processes to govern membership, behavior.

Incentives: digital payments, cryptocurrencies, finance and investment.

Evolution: collectively finding and introducing better ways to self-organize.

Promises of computing and networking technology as a “democratizing” force:

Inclusiveness:

Borderless communication with anyone anywhere on Earth

Access to information for “everyone”

Ability to collaborate productively with anyone globally

Ability to associate with like-minded people anywhere

Support for individual freedoms:

Speech: privacy, anonymity supports expression of true sentiment

Information: access to [good?] information from anywhere

Association: find, form groups with like-minded people anywhere

Press: anyone can publish blogs, social media, etc.

Resistance to control, censorship by entrenched authorities

Internet as a tool to “route around” failures, censorship

Cryptocurrencies as tools for bottom-up economic empowerment

These tools sometimes succeed, but often fail to serve people in a multitude of ways, due to flaws either in the abstraction or in the implementation.

Abstraction flaws and challenge examples:

Unscalable communication or human attention costs: e.g., broadcast communication, or “pure” direct democracy.

Naive cooperation designs cost O(n²) and fail to scale.
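A rough illustration of the quadratic cost, as a minimal sketch: the function names below are hypothetical, and the model assumes every participant posts one message that every other participant reads.

```python
def pairwise_links(n: int) -> int:
    """Distinct pairs among n participants: n(n-1)/2."""
    return n * (n - 1) // 2


def broadcast_reads(n: int) -> int:
    """Total reads if each of n participants broadcasts one message
    and each of the other n-1 participants reads it: n(n-1)."""
    return n * (n - 1)


if __name__ == "__main__":
    for n in (10, 100, 1000):
        # Growing the group 10x grows both totals roughly 100x.
        print(n, pairwise_links(n), broadcast_reads(n))
```

Per-participant attention grows only linearly (n-1 messages to read), but that already exceeds what a person can handle long before the group reaches the size of a town, let alone a country.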

Unreliable or misbehaving human participants: e.g., spam, trolling, sock puppetry, harassment, fake news, fake reviews.

The design of the platform and process greatly affects the behavior of its users - so how to design properly?

Who should “police” a community, and how? Government, platform operator employees, community self-policing?

Unintended effects of algorithms: e.g., polarization, radicalization

Social recommendation/newsfeed systems, echo chamber

Interaction-maximizing and click-maximizing algorithms and anger-exploiting media (e.g., YouTube).

Bias, both real and perceived, from self-selecting online groups

Tribalism, mistrust of information from other ideologies

Information relativism: lack of objective notion of “truth”

Overcompression of noisy, unreliable, manipulable information.

Large-scale campaigns, “beauty contest” elections.

Marketing-driven vs “efficient” markets, lemon markets.

Accountability of large [government] bureaucracies to electorate via the bottleneck of representation.

The competence- and expertise-identification problem

Populace choosing experts: may be poor judges

Experts choosing experts: unaccountable, may gradually become insular and fragmentation-prone.

Funding/incentivizing the production, curation of information

Free information vs monetization, copyright, DRM

Social media undermining traditional business models

Who bears the cost of information review and policing?

Users? Private platform operators? Governments?

Implementation flaws and challenges:

Network security, availability, resilience; “routing around” failures.

The upsides (resilience) and downsides (spam)

Identity and personhood problem: weak or strong digital identities; security, privacy, and coercion, Sybil and sock-puppet attacks.

The tension between anonymity (for freedom) and accountability (for civility).

Individual misbehavior versus Sybil amplification.

Software bugs, exploitable flaws.

Bitcoin wallets as a universal bug bounty.

Smart contract bugs: e.g., DAO, Fomo3D attacks.

Fairness challenges:

Investment-centric versus democratic stake models, the problem of “rich get richer” and power centralization

Corporations versus governments, foundations

Proof-of-work, proof-of-stake, etc. versus proof-of-personhood (PoP)

Inclusion challenges:

Digital divide: access to/expense of devices, connectivity.

Deliberate exclusion: disenfranchisement, guardianship.

Effective exclusion via trolling, harassment.

Identity attributes, personal data, coercion, black market.

Goals: What would we like to achieve? What might our long-term end goals be?

Technology enabling and incentivizing civil bottom-up self-organization.

Ensure freedoms of expression and association but with accountability.

Enable attention-limited users to obtain the (good) information they need to participate fully as time and interests permit, avoiding polarization.

Give communities not just voting but effective and scalable deliberation, agenda-setting, and long-term governance evolution processes.

Particular social/technical tools and techniques this course will explore:

Basic fault analysis techniques applying to failures and compromises.

Network/graph algorithms for communication, and social/trust networks.

Content filtering, recommendation, curation, and peer review processes.

Social choice: election methods, sampling, direct and liquid democracy.

Deliberation processes: peer review, online deliberation, agenda-setting

Techniques to achieve privacy and anonymity with accountability: e.g., verifiable shuffles, anonymous credentials, zero-knowledge proofs, PoP.

Deployed technologies we will look at, exploring their promise and flaws:

USENET (historical) and other public discussion forums.

News media: both traditional and social media platforms.

Deliberation: applications like Loomio and Liquid Feedback

Peer review: Slashdot, Reddit, GitHub, HotCRP

Cryptocurrencies and smart contracts: Bitcoin, Ethereum.

Organizations and decentralized autonomous organizations (DAOs).

Course logistics

TAs introduction

Content: Readings, lectures, quizzes, occasional hands-on exercises

Some may involve light programming in various languages, but the course is not programming-intensive.

Sessions: approx 5 hours per week total expected time commitment

Lecture: attendance normally expected and required

Will make provisions to ensure that missing one or two won’t seriously hurt grade, provided you catch up via colleagues

Discussion-oriented, normally blackboard-based, no slides

Exercises: need to attend only as directed in assigned exercises

TAs will be present to help with assigned exercises

Practical work: purely-optional time/space for reading, discussion

Instructors will not be present

Grading

Approx 50% - quizzes and exercises during semester

Approx 50% - final exam

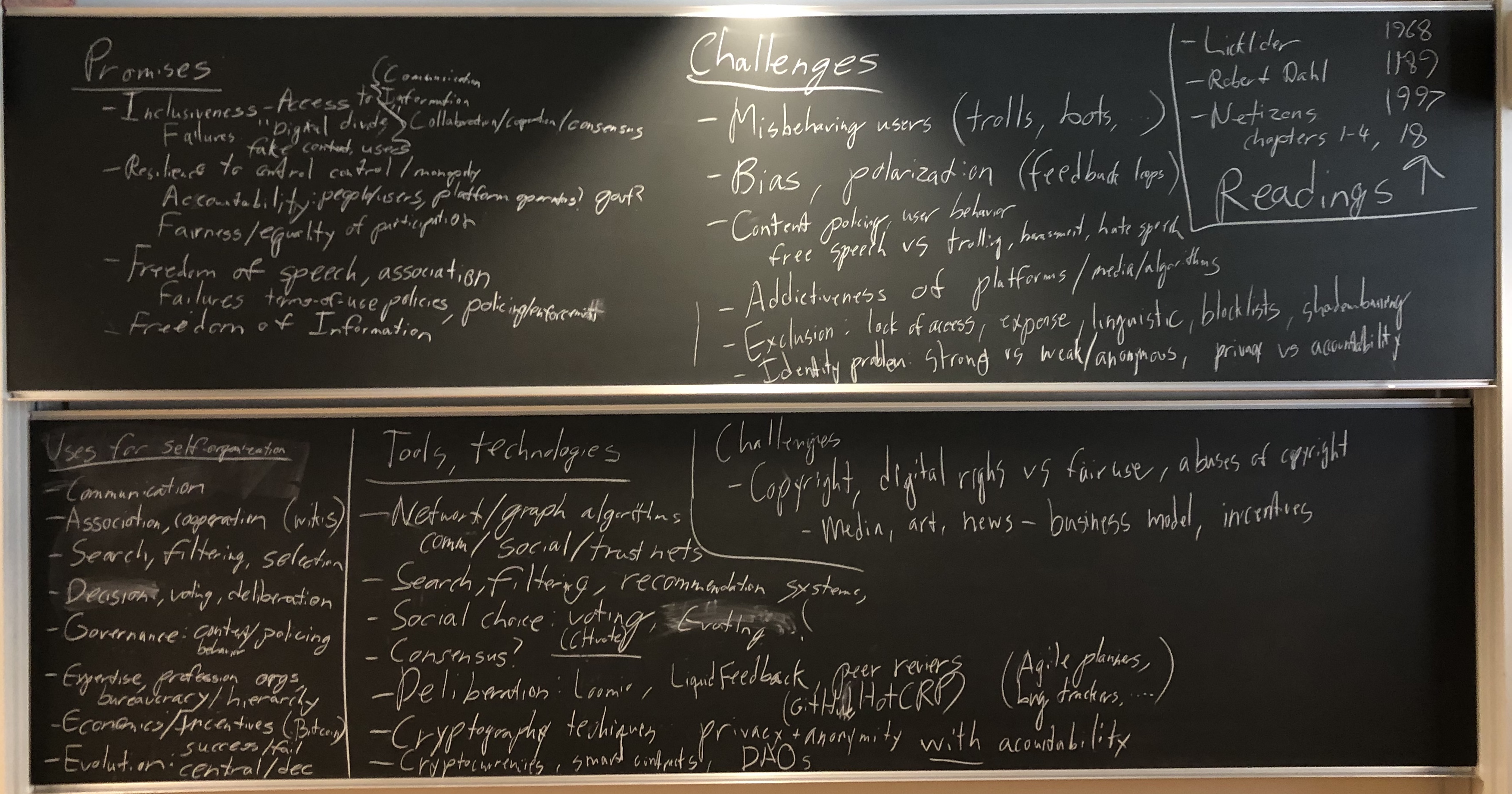

Post-lecture blackboard snapshot from 2019: