Hi,

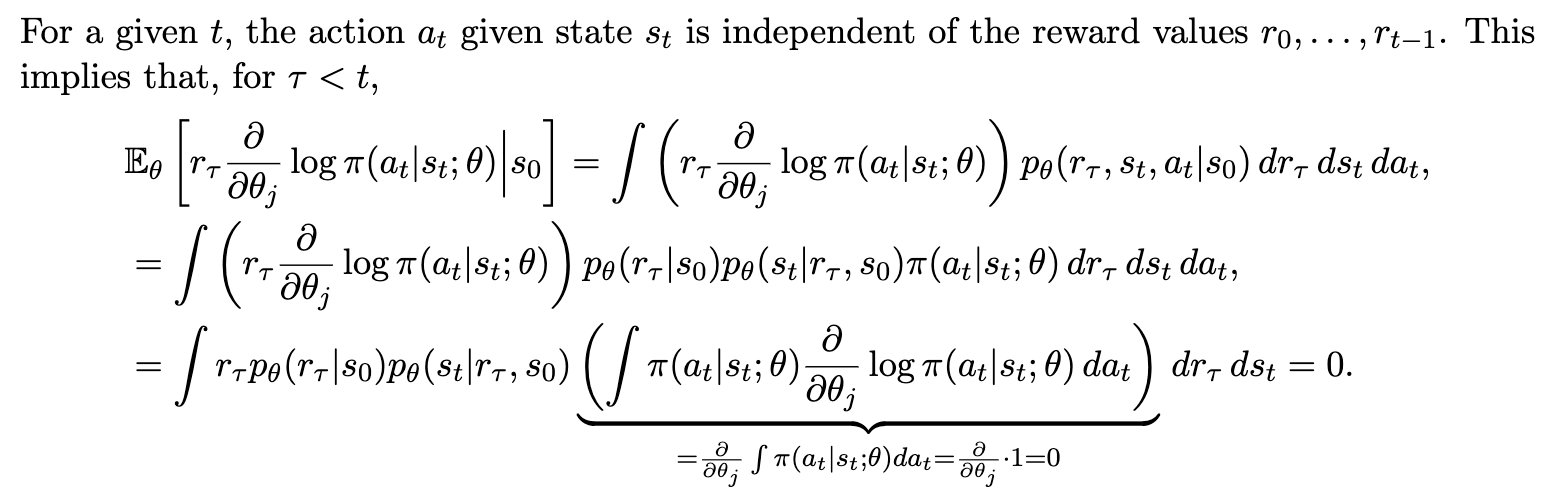

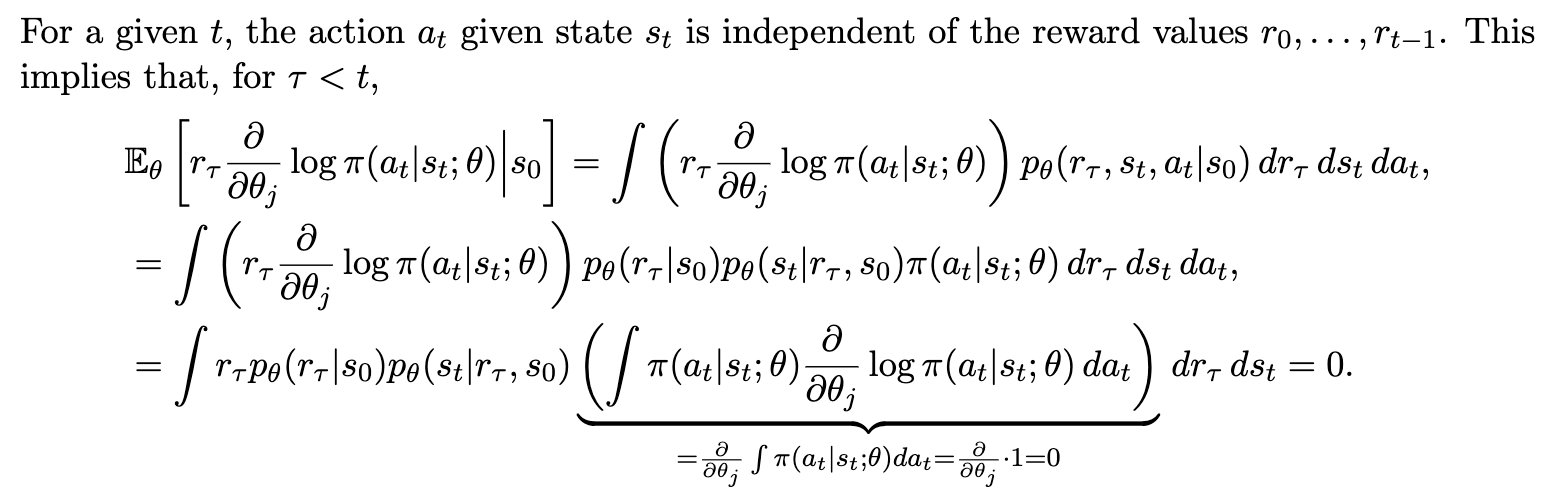

both the derivation in the class and in the exercise are valid ways to derive the batch rule for the policy gradient algorithm. The intention behind the exercise was to make you think about the derivation from a different angle: without explicitly using the Bellman equation, we can derive the batch rule directly from the multi-step return by writing out the integral over all possible state-action sequences. However, note that we use the same idea underlying the Bellman equation when we write the following (i.e. that a given state and action choice only depends on the future rewards):

both the derivation in the class and in the exercise are valid ways to derive the batch rule for the policy gradient algorithm. The intention behind the exercise was to make you think about the derivation from a different angle: without explicitly using the Bellman equation, we can derive the batch rule directly from the multi-step return by writing out the integral over all possible state-action sequences. However, note that we use the same idea underlying the Bellman equation when we write the following (i.e. that a given state and action choice only depends on the future rewards):

In the approach on the blackboard, when we write that we 'iteratively expand' the value estimate (since it also depends on the policy, so we need to apply the log-likelihood trick there as well), we effectively sum over the entire path in the episode, similar to the path integral that we write in the exercise.

Hope it's more clear now.

Hope it's more clear now.