Hi,

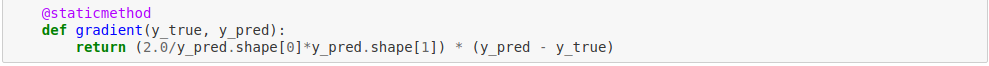

I have a question about the homework 7 correction. The figure below gives the formula for the gradient of the loss function.

![]()

But the implementation uses a different formula:

Unless I'm mistaken, it's equal to `(2.0/N) * K * (Y - Ŷ)`.
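One way to check which expression matches the loss is a finite-difference gradient check. The sketch below is only an illustration: it assumes a plain mean squared error loss, which may not be the loss used in the homework, and it leaves out the factor K. The function names (`mse_loss`, `analytic_grad`, `numeric_grad`) and the sample values are made up for this example.

```python
# Assumption: loss = (1/N) * sum((y_i - y_hat_i)^2). This may differ from the homework's loss.

def mse_loss(y, y_hat):
    """Mean squared error over N values."""
    n = len(y)
    return sum((a - b) ** 2 for a, b in zip(y, y_hat)) / n


def analytic_grad(y, y_hat):
    """Gradient of mse_loss with respect to y_hat: -(2/N) * (y - y_hat)."""
    n = len(y)
    return [-(2.0 / n) * (a - b) for a, b in zip(y, y_hat)]


def numeric_grad(y, y_hat, eps=1e-6):
    """Central finite differences, perturbing one coordinate at a time."""
    grad = []
    for i in range(len(y_hat)):
        plus = list(y_hat)
        minus = list(y_hat)
        plus[i] += eps
        minus[i] -= eps
        grad.append((mse_loss(y, plus) - mse_loss(y, minus)) / (2 * eps))
    return grad


# Hypothetical sample values, chosen only to exercise the check.
y = [1.0, 0.0, 0.0]
y_hat = [0.7, 0.2, 0.1]

max_diff = max(
    abs(a - n)
    for a, n in zip(analytic_grad(y, y_hat), numeric_grad(y, y_hat))
)
print(max_diff)  # should be close to zero if the analytic gradient is right
```

Under this assumed loss, the gradient with respect to Ŷ carries a minus sign in front of (Y - Ŷ), so the same check could be repeated with the homework's actual loss to see which of the two formulas it agrees with.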

Also, it's very surprising that this second formula gives better results on the MNIST test set than the first formula does. Do you know why?

Thank you in advance for your answers,

Best,

Guilaume