Hi, I have a couple of questions on 2 lectures,

- Lecture 8:

- Why do we need a correction weight when using prioritized replay ?

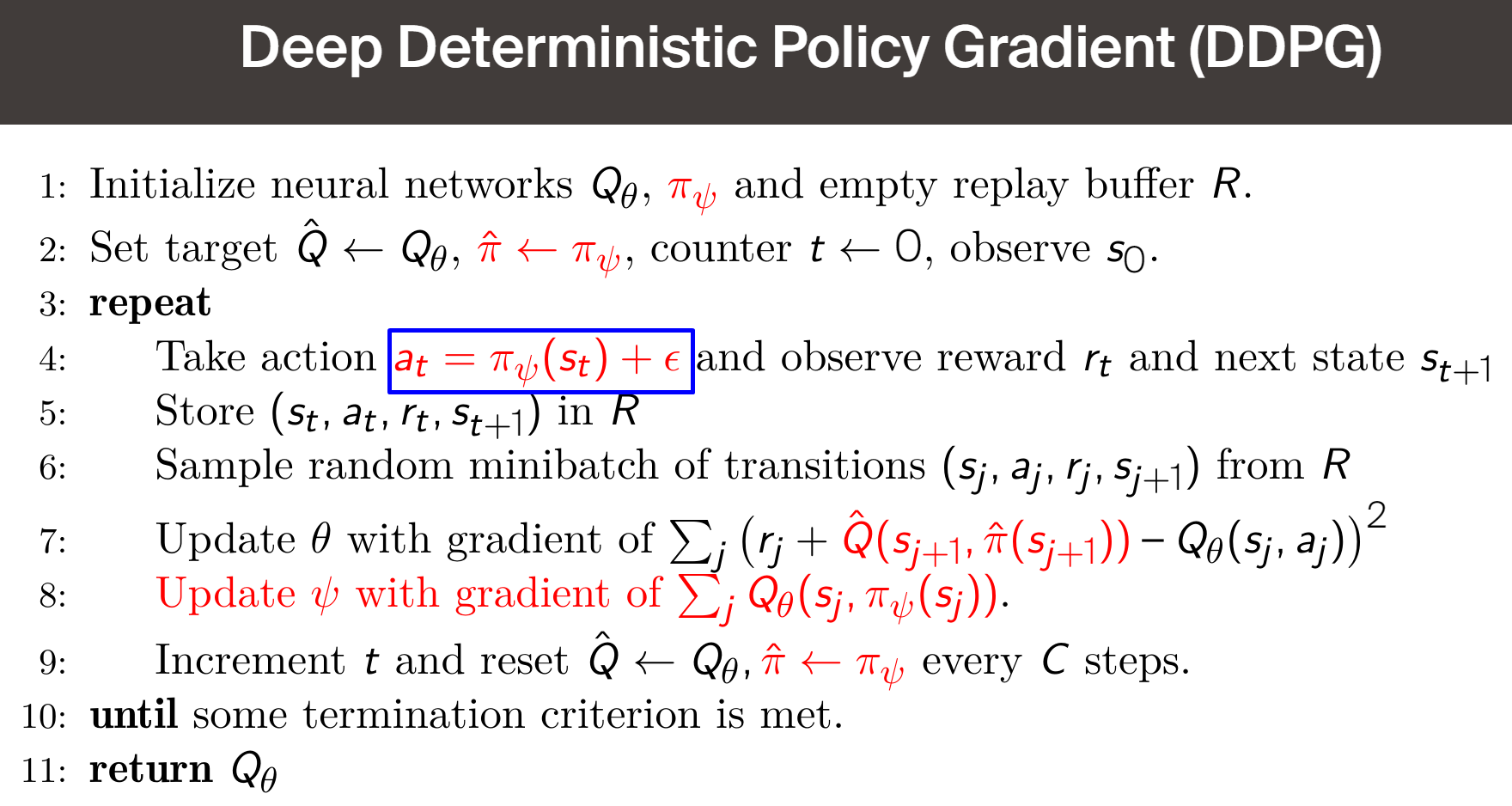

- About the difference between on-policy and off-policy methods. From what I understood, off-policy methods update a different policy that the one used to generate data. Furthemore, according to lecture 8 we cannot naively use replay-buffer for on-policy methods. I expect the DDPG algorithm to be on-policy as it uses the policy network during both exploration and when updating the weights. Why does using replay-buffer in that situation work well ?

- Lecture 3: Does stationary

means that the policy pi(a|s) has converged? In the formula for

means that the policy pi(a|s) has converged? In the formula for  there should be

there should be  instead of

instead of  right ?

right ?